Lidar project

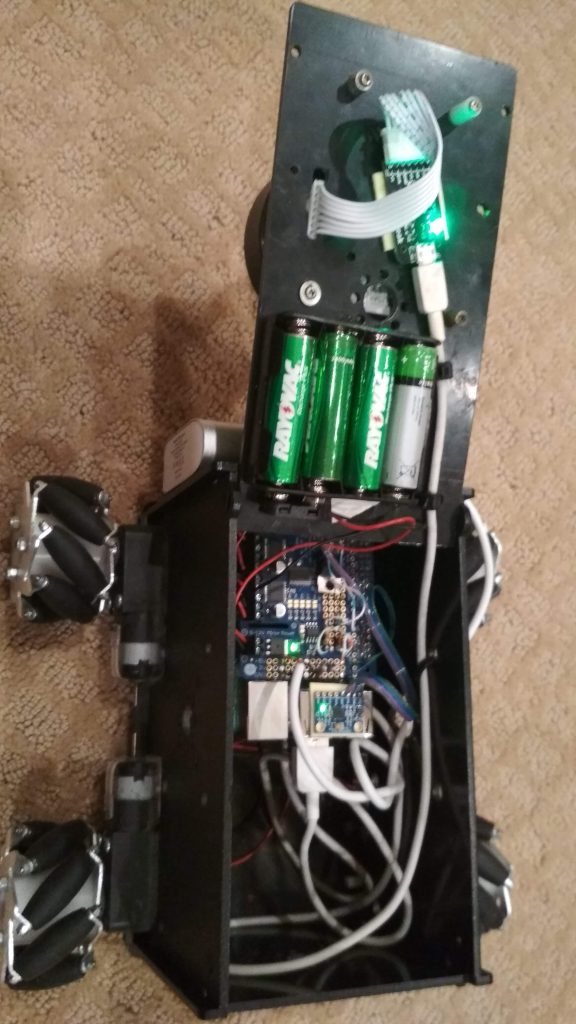

This little robot carries a lidar and is controlled by a Raspberry Pi. It has mecanum wheels, which let it move in any direction, including sideways. The Raspberry Pi is powered by a 5V lithium-ion battery pack, while the motors run on four AA batteries. The computer displays the lidar readings, thereby showing the robot’s surroundings. The computer also calculates the position of the robot with SLAM. The Raspberry Pi communicates with the computer over the Ethernet network using TCP.
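To make the data flow a bit more concrete, here is a minimal Python sketch of how one scan could be packed into bytes for the TCP link and turned into x/y points for display on the computer. It is not the code from the repositories: the wire format (a 4-byte count followed by pairs of 32-bit floats), the function names, and the rule that a distance of 0 means "no reading" are all assumptions made for illustration.

```python
import math
import struct

# Hypothetical wire format (an assumption, not the project's real protocol):
# a 4-byte big-endian reading count, then one (angle_deg, distance_mm)
# pair of 32-bit floats per reading.

def pack_scan(readings):
    """Serialize a list of (angle_deg, distance_mm) tuples into bytes."""
    payload = struct.pack("!I", len(readings))
    for angle_deg, distance_mm in readings:
        payload += struct.pack("!ff", angle_deg, distance_mm)
    return payload

def unpack_scan(payload):
    """Inverse of pack_scan: bytes back into a list of (angle, distance)."""
    (count,) = struct.unpack_from("!I", payload, 0)
    return [struct.unpack_from("!ff", payload, 4 + 8 * i) for i in range(count)]

def to_points(readings):
    """Convert polar lidar readings into Cartesian (x, y) points in mm."""
    points = []
    for angle_deg, distance_mm in readings:
        if distance_mm <= 0:  # assumed convention: 0 means no valid reading
            continue
        angle = math.radians(angle_deg)
        points.append((distance_mm * math.cos(angle),
                       distance_mm * math.sin(angle)))
    return points

scan = [(0.0, 1000.0), (90.0, 500.0), (180.0, 0.0)]
points = to_points(unpack_scan(pack_scan(scan)))
```

On the robot side, the bytes from `pack_scan` would be written to a TCP socket, and the computer would read them back and plot the result of `to_points`. The invalid reading at 180 degrees is dropped, so only two points remain.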

I started out with the NEATO XV-11 lidar. However, this lidar had a slow scan rate, so I switched over to the RPLIDAR A1. Both cost around $100.

The SLAM calculation basically looks like this:

- The computer calculates a difference value for every possible movement of the robot. This takes billions of calculations a second, so it runs on the GPU with OpenCL.

- The computer finds the movement coordinates with the lowest difference. This is just a find-the-lowest-number kind of program. However, it also runs on the GPU, because it has to find the lowest number among millions of numbers.

- The computer adds the calculated coordinates to the total coordinates.

- When the computer gets a new reading, it repeats the same process.
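The steps above can be sketched in a few lines of Python. This is only a rough CPU illustration under stated assumptions, not the OpenCL code from the repository: the difference measure (squared distance from each point to its nearest point in the previous scan), the tiny search grid, the function names, and the way the movement is rotated into the total coordinates are all choices made here to keep the example short.

```python
import math

def transform(points, dx, dy, dtheta):
    """Rotate points by dtheta (radians), then shift them by (dx, dy)."""
    c, s = math.cos(dtheta), math.sin(dtheta)
    return [(c * x - s * y + dx, s * x + c * y + dy) for x, y in points]

def scan_difference(previous, candidate):
    """Assumed difference measure: for each candidate point, the squared
    distance to its nearest point in the previous scan, summed up."""
    return sum(min((cx - px) ** 2 + (cy - py) ** 2 for px, py in previous)
               for cx, cy in candidate)

def best_movement(previous, current, shifts, angles):
    """Step 1: score every candidate movement. Step 2: pick the lowest."""
    scores = [(scan_difference(previous, transform(current, dx, dy, dth)),
               dx, dy, dth)
              for dx in shifts for dy in shifts for dth in angles]
    _, dx, dy, dth = min(scores)
    return dx, dy, dth

def accumulate(pose, movement):
    """Step 3: add the movement (measured in the robot's previous frame)
    to the total pose (x, y, heading)."""
    x, y, theta = pose
    dx, dy, dth = movement
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * dx - s * dy, y + s * dx + c * dy, theta + dth)

# Made-up scan: a few wall points seen from the starting position.
previous = [(0, 1000), (200, 1000), (400, 1000),
            (1000, 0), (1000, 300), (-500, -700)]
# The same points as seen after the robot moved by (100, -50) without turning.
current = [(x - 100, y + 50) for x, y in previous]

movement = best_movement(previous, current,
                         shifts=range(-100, 101, 50),
                         angles=[-0.1, 0.0, 0.1])
pose = accumulate((0.0, 0.0, 0.0), movement)
```

Even this small grid has 5 × 5 × 3 = 75 candidates, each compared against every point of the previous scan. With a fine grid and a full 360 degree scan, the number of candidates grows into the millions, which is why the work is handed to the GPU.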

I’m currently working on creating a map of the robot’s surroundings from the lidar data. I also recently switched from Windows to Linux as my main operating system, so I have to switch from Visual Studio to another IDE. The problem is that .NET (which I’m using for my graphical interface on Windows) doesn’t work on Linux, so I also have to find another graphical interface for Linux. I’m thinking about using GTK+.

Repositories for this project:

- Program on the computer: https://github.com/gaborszita/lidar-project-vs

- Program on the robot (Raspberry Pi): https://github.com/gaborszita/lidar-project-rpi

For updates, check the posts in the lidar project category.